[This article was originally published in the OTT Annual Review 2019-2020: think tanks and technology in March 2020.]

In the think tank world, talk about artificial intelligence (AI) is common. Using it is less common. One of the underlying causes of this may be a perceived lack of familiarity with the methods. However, AI methods – including machine learning – are probably more familiar to many thinktankers than they realise. The Russian Propaganda Barometer project, recently conducted by the Caucasus Research Resource Center Georgia (CRRC-Georgia), demonstrates the potential of these tools for policy insight – particularly relating to discourse analysis, and developing targeting strategies.

AI and machine learning are more familiar than thinktankers think

To say that AI in general, and machine learning algorithms specifically, are dramatically changing industry would be an understatement. From optimising electricity usage in factories to deciding which advertisement to show you online, algorithms are in use all around us. In fact, algorithms have been shaping the world around us for decades.

The think tank and social science worlds are no exceptions to this. Indeed, most policy researchers will be familiar with, if not users of, algorithms like regression. Notably, this is a common tool in the machine learning world as well as social science research.

Hopefully, knowing that regression is part of the machine learning toolbox will make it clear that machine learning is less foreign than many thinktankers may think.

While regression is one method in the machine learning toolbox, there are others. Although these methods are not new, this larger toolbox has only become commonly used in recent years as big data sets have become more available.

For many products and problems, machine learning solutions might be improvements on existing think tank practices. This is particularly true when it comes to developing a targeting strategy for programming, monitoring, or anything that focuses on understanding discourses.

The Russian Propaganda Barometer project

CRRC-Georgia implemented the Russian Propaganda Barometer project, funded by USAID through the East West Management Institute, in 2018-2019. The project aimed to understand and monitor sources of Russian propaganda in Georgia, and to identify who was more or less likely to be vulnerable to the propaganda.

CRRC collected posts from all the potential sources of Russian propaganda (in Georgian) on public Facebook pages. These pages had been identified by two other organisations that were also working on the issue, and CRRC identified further pages that were missing from the two organisations’ lists. The posts were then analysed using natural language processing tools such as sentiment analysis. Network analysis was also conducted to understand the interlinkages between different sources.
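To give a sense of what network analysis of this kind involves, here is a minimal sketch in Python. It is not the project's actual pipeline: the article does not say how links between sources were defined, so the sketch assumes a link means one page sharing another page's content. The page names and the `shares` list are hypothetical toy data.

```python
from collections import Counter

# Hypothetical edge list (an assumption, not project data):
# each pair is (page that shared, page whose content was shared).
shares = [
    ("page_a", "page_b"),
    ("page_a", "page_c"),
    ("page_b", "page_c"),
    ("page_d", "page_c"),
    ("page_d", "page_a"),
    ("page_d", "page_c"),  # repeated pair, counted once below
]

def in_degree(edges):
    """Count how many distinct pages shared content from each page."""
    counts = Counter()
    for _source, target in set(edges):  # set() drops repeated pairs
        counts[target] += 1
    return counts

def most_shared(edges, top_n=1):
    """Return the top_n pages ranked by how many distinct pages share them."""
    return in_degree(edges).most_common(top_n)

hubs = most_shared(shares, top_n=1)
```

Pages with a high count sit at the centre of the network. Which measure suits a given question (in-degree, as here, or something richer) is a judgement call for the analyst.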

One of the key insights from the project is that most of the identified sources of propaganda were in fact far-right organisations. However, an analysis of how they talked about the West and Russia suggests that most actually have more negative attitudes towards Russia than towards the West.

The analysis also called attention to the sharp rise in interest in the far right in Georgia. The number of interactions with far-right pages had increased by roughly 800% since 2015. While overall increasing internet use in the country likely contributed to this, it seems unlikely to be the only cause of the rise.

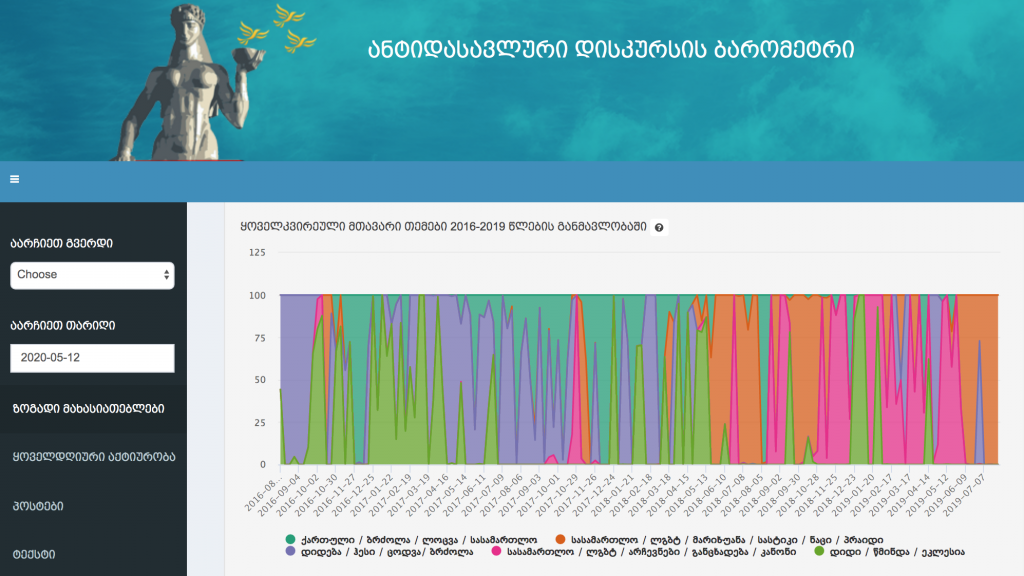

The results were presented in an online dashboard, as well as in a more traditional report. The dashboard enables users to see what the far right is talking about on a daily basis and the networks between different groups, among other metrics.

The project also aimed to inform a targeting strategy on countering anti-Western propaganda. To do so, we merged data from approximately 30 waves of CRRC and National Democratic Institute surveys that asked about a variety of preferences.

From there, a ‘k-nearest neighbours’ algorithm was used to identify which groups had uncertain or inchoate foreign policy preferences. The algorithm identifies how similar people are, based on the variables included. This led to an algorithm that provided accurate predictions about two thirds of the time as to whether someone would be more or less likely to be influenced by Russian propaganda. Further research showed that the algorithm was stable in predicting whether someone was at risk of being influenced, using data that did not exist at the time of the algorithm’s creation.
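For readers who have not met k-nearest neighbours before, the idea can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the model used in the project: the two variables, the labels, the choice of `k=3`, and all the numbers are hypothetical toy values. It also shows the kind of check described above, scoring predictions against respondents the algorithm has not seen.

```python
import math
from collections import Counter

def knn_predict(train_rows, train_labels, query, k=3):
    """Label `query` by majority vote among its k closest training rows."""
    # Euclidean distance from the query to every training row.
    distances = [
        (math.dist(row, query), label)
        for row, label in zip(train_rows, train_labels)
    ]
    # Keep the k nearest neighbours and count their labels.
    nearest = sorted(distances, key=lambda pair: pair[0])[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

def accuracy(train_rows, train_labels, test_rows, test_labels, k=3):
    """Share of held-out rows whose predicted label matches the true one."""
    hits = sum(
        knn_predict(train_rows, train_labels, row, k) == label
        for row, label in zip(test_rows, test_labels)
    )
    return hits / len(test_rows)

# Hypothetical respondents: two survey variables, each rescaled to 0-1.
train_rows = [(0.2, 0.9), (0.3, 0.8), (0.25, 0.85),
              (0.8, 0.2), (0.7, 0.1), (0.9, 0.3)]
train_labels = ["less_at_risk"] * 3 + ["more_at_risk"] * 3

# Hypothetical held-out respondents, not used when building the model.
test_rows = [(0.75, 0.25), (0.2, 0.8), (0.85, 0.15)]
test_labels = ["more_at_risk", "less_at_risk", "less_at_risk"]

prediction = knn_predict(train_rows, train_labels, (0.75, 0.25))
held_out_accuracy = accuracy(train_rows, train_labels, test_rows, test_labels)
```

Because the method relies on distances, variables measured on different scales need rescaling first. Otherwise the variable with the largest numeric range dominates the notion of who counts as "similar".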

The data analysis, while cutting edge in many respects, is not beyond the means of many quantitative researchers. Neither of us has an MA or PhD in statistics: David is a geographer and Dustin is a political scientist.

While the Russian Propaganda Barometer addressed the research goals, we’d like to highlight that AI is no panacea. For the project’s success, we combined traditional think tank analysis of the situation in Georgia with AI to generate new insights.

The Russian Propaganda Barometer project is just one type of application of machine learning to policy research. There is good reason to believe more and more policy researchers will use these methods given their ubiquity in the modern world, together with the increasing availability of the large datasets needed to study these issues. We hope that this project can serve as food for thought for others in service of this goal.
