As if think tanks didn’t already have enough to contend with – working in environments where many are finding it increasingly difficult to operate, growing pessimism over political contexts, and persistent financial woes – along comes artificial intelligence (AI) to bring new uncertainties!

In order to understand how think tank communicators are beginning to navigate these uncertainties, WonkComms undertook a survey to provide a snapshot of the attitudes, approaches and practices in the sector. The aim was to understand how the technology is being used and how it features in people’s day-to-day working lives.

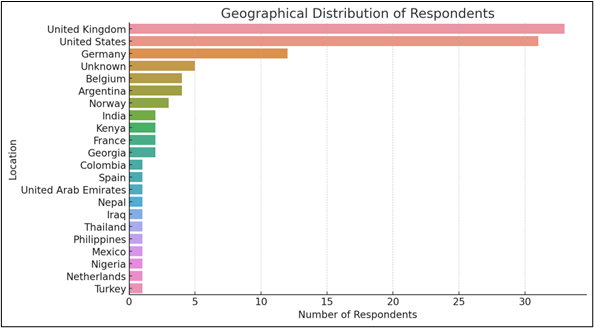

The survey was conducted between February and April 2024 and was completed by 111 respondents, from 21 countries. It was carried out with the support of Cast from Clay, On Think Tanks, Parsons TKO, the R Street Institute, Smart Thinking, Soapbox, Sociopublico, and the Wuppertal Institute.

Our survey showed that think tank communicators are already using AI; however, if think tanks are to avoid reputational and other risks, they need to address two clear and present AI-related dangers: a lack of organisational support and a lack of training.

Overall snapshot of AI in think tanks

The survey results presented a mixed picture of AI adoption in think tanks: we saw high levels of AI usage combined with high hopes for productivity, a wide range of different fears about how AI could impact people and society, and a desire for more support for staff from think tank employers.

These findings are not exceptional: they reflect the results of similar surveys in many other sectors, offering some reassurance that think tank communicators are not alone in their experiences.

However, two immediate and obvious challenges have emerged, both focusing on how think tanks can help their communications staff to use AI safely and effectively:

1. In a sector where credibility is key, high levels of AI usage combined with a lack of training is a risk.

This needs to be addressed to better meet and understand staff needs and to exploit productivity efficiencies – for example, identifying the right tools for given tasks – without endangering organisational reputations.

2. The lack of focus on organisational policies combined with low levels of collaboration within the sector hints at a missed opportunity.

Think tanks and the think tank sector are not focusing enough on AI’s impact and potential. They need to consider how they can develop more enabling AI environments for their staff by creating safe spaces for experimentation; clear operational principles; and open, collaborative cultures.

Six key findings

1. Many thinktankers are already AI users

In total, 90% of our respondents told us that they use AI at work: writing, editing and transcribing emerged as the top uses.

Among the respondents, 75% were in the early stages of their AI journey, with limited experience of the technology and using it only occasionally for specific tasks. Only 22% said that they use AI regularly or extensively as part of their working lives.

More specifically, the survey showed that communications teams are using AI for a range of tasks, including social media posts (43%); editing (41%); brainstorming/filling a ‘blank page’ (36%); background research (31%); and creating blogs/articles (25%).

In addition, 26% said that research teams in their organisations were using AI in their work, as were human resources/staff support functions (20%).

2. Thinktankers need and want AI training

Overall, 95% of our respondents had high hopes that AI will increase their productivity. They’ve heard the AI hype and want the technology to help them and the sector.

That said, they cited the need for support to help them deliver efficiencies (43%) and expressed a desire for training and online learning (58% and 55%, respectively) to continue their learning journey.

This chimes with surveys conducted within other sectors, where the need for reskilling and up-skilling has been a common finding.

The latest figures show that bosses across many sectors believe that 40% of their workforce will need to retrain within the next five years due to the impact of AI, while 60% of the workforce itself believes that they will need to retrain (survey by Oliver Wyman Forum).

3. Thinktankers have shared concerns about AI

Other respondents are more cautious about the impact of AI and how they use it, with a variety of different concerns being expressed, including the following:

- It will hasten the loss of human interaction (48%).

- It will be a threat to privacy (46%).

- It will bring an overwhelming pace of change (42%).

- It will perpetuate biases and create disparities (41%).

Because AI usage numbers are so high (see finding 1) and are likely to grow in the coming years, it’s worth pausing to consider some of the implications of using AI in editing, creating articles and research.

This is particularly important as 25% of respondents reported using AI without disclosing it.

If thinktankers – be they in communications or research – produce AI-generated content without proper acknowledgement and are discovered to have done so, this would undermine the reputation of their research organisation.

Furthermore, when this finding is examined alongside external surveys, the risk of reputational damage becomes even greater. It’s been found that 60,000 academic articles published in 2023 used large language models (analysis by Andrew Gray). Moreover, 64% of the workforce has admitted to claiming generative AI work as their own (survey by Salesforce).

If this trend grows and spreads, the risk to the credibility of a sector built on rigorous, independent analysis could be significant, even existential, justifying the concerns of our respondents.

4. Thinktankers aren’t too concerned about AI’s impact on their jobs

Despite the desire for training, only 20% of the respondents expressed a fear that AI might put them out of a job.

Considering how much AI’s impact on jobs dominates headlines, this might seem a surprisingly low number, especially given that other non-think-tank surveys have found that 60% of their respondents feared that AI might endanger their job (surveys by Oliver Wyman Forum and IMF).

It isn’t clear why this concern is so much less prevalent within the think tank sector – perhaps thinktankers are confident in the value of their analyses, their ability to understand and explain policy nuance, and the deep personal networks and relationships they are able to develop.

5. Think tanks aren’t prepared for AI

In our WonkComms survey, 70% said that their organisation was not prepared or only somewhat prepared for AI adoption.

Policies are clearly an important part of enabling safer AI usage in think tanks. But surveys from other sectors sound a note of caution for the 28% of think tanks developing them: don’t just try to restrict AI use. The wide availability of AI tools, and the ease of accessing them, can mean that more restrictive approaches don’t work.

For example, in a Salesforce survey of workers in many different sectors, a large percentage of respondents admitted to using unapproved and banned AI tools at work (55% and 40%, respectively). Restrictions, therefore, can actually increase legal and security risks to think tanks.

6. Thinktankers want to connect and share information on AI

A desire to learn from others also featured prominently in our survey, which was possibly fuelled by the aforementioned concerns.

In total, 55% stated that they would like to see evidence of how AI is being used by other organisations in the sector. A significant number also wanted to talk to their peers about AI usage (43%), or to AI experts/consultants (40%).

There was one practical outcome from the survey: DGAP used the survey questions as the basis of an internal exercise to gauge how their staff are using AI and their attitudes towards it.

They’ll use these findings and the process to develop a list of secure and safe AI tools, alongside a code of conduct for their use.

Telling the survey’s story using AI avatars

The survey results were presented at the On Think Tanks conference in Barcelona in May 2024.

To avoid a number-heavy presentation, we decided to see what we could get AI to produce:

- We loaded the survey data into ChatGPT (the survey was anonymous, so there were no risks of private data being used to train AI models).

- We asked ChatGPT to analyse the results and create a script for two talking head personas, which represented some common traits in people’s survey responses. Two scripts were created: one for a think tank communicator from the United Kingdom and another from the United States, our two most represented countries.

- Knowing that generative AI is great at bias, we asked it to increase the stereotypes and create a second version of the scripts.

- We then used Synthesia.io to create video avatars.

Below, we describe our WonkComms avatars, Alex and Emma. They each told stories that were really important in our findings.

Emma

Emma represented the largest group of respondents: people starting an AI journey with high hopes and high support needs.

These are people in the early stages of experimentation, with a focus on work. They are hopeful about AI’s potential, but need support to understand how AI can help them.

How would they like to get that support? Through training and through support from their organisations (which they don’t see as prepared for AI).

Watch Emma here.

Alex

Alex represented the views of many of the respondents who were cautious about AI. They have lots of fears about the potential for AI to “lose the human touch”, to impact privacy and data security, to bring ever-faster change, or to do harm through biased data and responses.

Even though they’re cautious, they’re aware of the potential use of AI within their think tanks – in communications teams, research teams and beyond.

More than anything, they’d love to connect and share use cases and ideas with peers in other think tanks.

Watch Alex here.
